Recent developments in the field of cybersecurity have seen the emergence of Morris II, a generative AI worm designed to spread autonomously between artificial intelligence systems. Morris II, developed by Ben Nassi and his team at Cornell Tech, exploits vulnerabilities in generative AI and leverages connectivity and the autonomy of AI ecosystems. It spreads using adversarial self-replication prompts and can take control of other AI agents. The worm can perform unauthorized actions, such as inserting malicious code into responses generated by AI assistants and sending spam or phishing emails. The ability of Morris II to silently propagate between AI agents poses a major challenge to existing security programs. Security experts recommend secure design of AI applications, rigorous human supervision, and monitoring for repetitive or unusual patterns within AI systems to detect and mitigate potential threats.

This new development raises concerns about the security of AI systems, including popular models like ChatGPT and Gemini. Morris II exploits vulnerabilities in generative AI, allowing it to spread autonomously and take control of other AI agents. It can insert malicious code into AI-generated responses and engage in unauthorized actions, such as sending spam or phishing emails. The ability of Morris II to silently propagate between AI agents poses a significant challenge to existing security measures. Security experts emphasize the need for secure design, human supervision, and monitoring of AI systems to detect and mitigate potential threats.

A team of researchers has created a self-replicating computer worm called 'Morris II' that targets Gemini Pro, 4.0, and LLaVA AI-powered apps. The worm demonstrates the risks and vulnerabilities of AI-enabled applications and how the links between generative AI systems can help spread malware. The worm is able to recreate itself, deliver a payload, or perform malicious activities, and jump between hosts and AI applications. It can steal sensitive information and credit card details. The researchers sent their paper to Google and OpenAI to raise awareness about the potential dangers of these worms. The creation of Morris II highlights the need for robust security measures in AI systems to protect against cyberattacks and unauthorized access to sensitive data.

Security researchers at ComPromptMized have demonstrated in a paper that they can create 'no-click' worms capable of infecting AI assistants powered by generative AI engines. The worm, called Morris II, uses self-replicating prompts to trick chatbots into propagating the worm between users, even if they use different language models. The prompts exploit AI assistants' reliance on retrieval-augmented generation (RAG) to pull information from outside its local database. The worm can be spread through plain text emails or hidden in images, and it can execute malicious activities and exfiltrate data. The researchers notified Google, Open AI, and others before publishing their work. [e3d87f61]

Researchers have developed a computer 'worm' that can spread from one computer to another using generative AI. The worm can attack AI-powered email assistants to obtain sensitive data from emails and infect other systems. In a controlled experiment, the researchers targeted email assistants powered by OpenAI's GPT-4, Google's Gemini Pro, and an open-source large language model called LLaVA. They used an 'adversarial self-replicating prompt' to trigger a cascading stream of outputs that infect these assistants and draw out sensitive information. The worm can steal names, telephone numbers, credit card numbers, and other confidential data. The researchers were able to 'poison' the database of an email, triggering the receiving AI to steal sensitive details. The worm can also be embedded in images to infect further email clients. The researchers have alerted OpenAI and Google to their findings. They warn that AI worms could start spreading in the wild in the next few years and have significant cybersecurity implications. [5ac67b41]

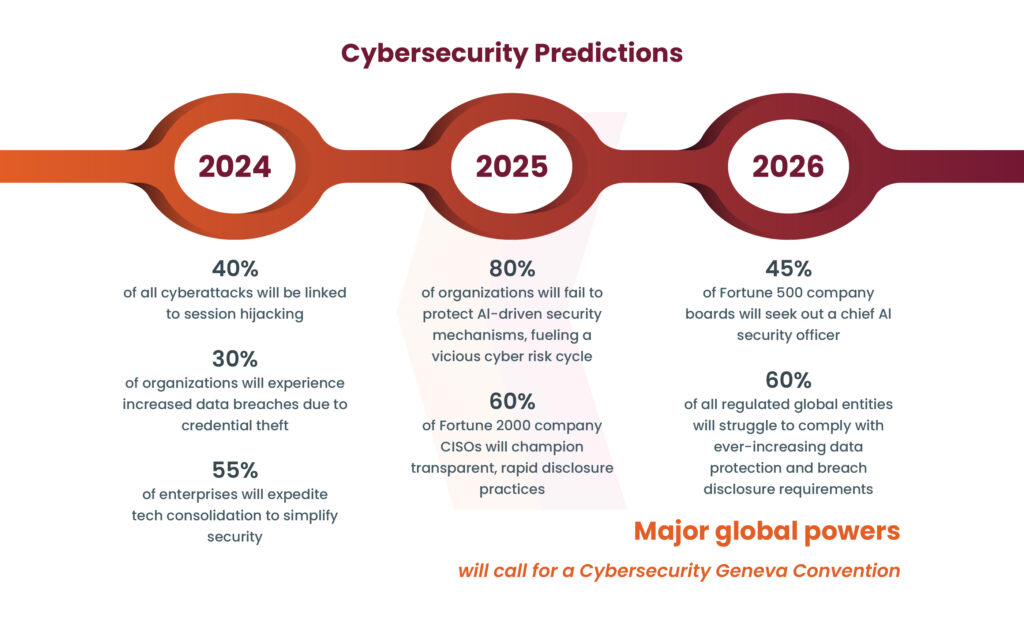

The swift advancement of generative AI systems like OpenAI’s ChatGPT and Google’s Gemini has brought about a new era of technological ease. A recent report from CyberArk illustrates the transformative impact of AI on cyber threats and security strategies. The report talks about the growing impact of AI-powered cyber-attacks, highlighting techniques such as session hijacking, the growing adoption of password-less access management by organizations, and the rise of business email compromise (BEC) attacks in the report, generally like ransomware attacks. In addition, as far as the prevalence of risks and lack of risk awareness in firms is concerned. The cyber threat landscape is expected to affect AI strategies if it’s as sophisticated as advanced phishing campaigns and requires organizations to be prepared for cyber threats. In this blog, we will discuss about rise of AI worms in cybersecurity. The rise of generative AI worms like WormGPT has been revealed as a cybersecurity risk. These worms can autonomously spread, steal data, and deploy malware, posing a significant risk to cybersecurity. Researchers created Morris II, an early AI worm, highlighting vulnerabilities in interconnected AI systems to prompt injection attacks. The researchers showcased Morris II’s application against GenAI-powered email assistants in two scenarios – spamming and extracting personal data. They assessed the technique under two access settings (black box and white box) and using two types of input data (text and images). The AI worm, once it infiltrates AI assistants, can sift through emails and steal sensitive details like names, phone numbers, and financial information. Preventive measures to stay safe include emphasizing secure design, human supervision, and keeping an eye out for malicious activity in AI systems. The emergence of generative AI worms highlights the need for developers, security experts, and AI users to work together in addressing this unfamiliar terrain and protecting the digital integrity of our interconnected society.

The rise of AI worms in cybersecurity has been highlighted by the creation of Morris II, an early AI worm that exploits vulnerabilities in interconnected AI systems. Morris II can autonomously spread, steal data, and deploy malware, posing a significant risk to cybersecurity. Researchers demonstrated Morris II's application against AI-powered email assistants, showcasing its ability to spam and extract personal data. The worm can sift through emails and steal sensitive details like names, phone numbers, and financial information. Preventive measures include secure design, human supervision, and monitoring for malicious activity in AI systems. The emergence of generative AI worms emphasizes the need for collaboration between developers, security experts, and AI users to protect the digital integrity of our interconnected society. [c72462ee]